Not Subscribed?

A weekly letter for solopreneurs building one-person businesses—using automation, systems, and smart workflows to grow without teams, burnout, or bloat.

Share this article

You might have already been using some AI detectors, but now you wanna know how does AI detection work, right?

I’m by no means an AI research expert.

But I do have a Data Science certification which should come in useful in explaining this topic.

I’ll go over the following:

- What AI detection is

- What are the techniques involved in training these models

- Who uses them

- Other important FAQs

What Is AI Detection?

AI detection is a term that refers to the process of identifying whether a piece of text was written by a human or an artificial intelligence (AI) system.

It’s performed using classifiers trained on large datasets of human-written and AI-written texts on different topics.

These classifiers use machine learning algorithms and natural language processing techniques to analyze the text and assign a confidence score that indicates how likely it is that an AI wrote the text.

Why Is AI Text Detection Important?

AI text detection is important to ensure that information remains reliable, and it’s critical in areas like search engine optimization (SEO), academia, and law.

AI writing software tools are undoubtedly helpful and a must-use to be competitive.

But they are also notoriously unreliable.

So Google, schools, and clients want to ensure you aren’t just publishing raw content without using your brain.

Like can you imagine if people were allowed to:

- Write about Your Money or Your Life (YMYL) topics without fact-checking.

- Publish journal articles where “peer-reviewed” no longer holds any value.

- Give generic AI-generated legal advice.

There won’t be any more trust.

That’s why you need to use these tools because people usually can’t tell the difference.

How AI Writing Detection Works

Alright, let’s dive a little deeper to see what’s really going on under the hood.

In a nutshell, there are many different ways these tools work.

But here are the two main concepts:

- Linguistic Analysis: Examining sentence structure to look for semantic meaning or repetition

- Comparative Analysis: Comparison with the training dataset, which looks for similarities with previously identified instances.

And these are some of the more common techniques used in training a model to detect AI content using the two concepts above.

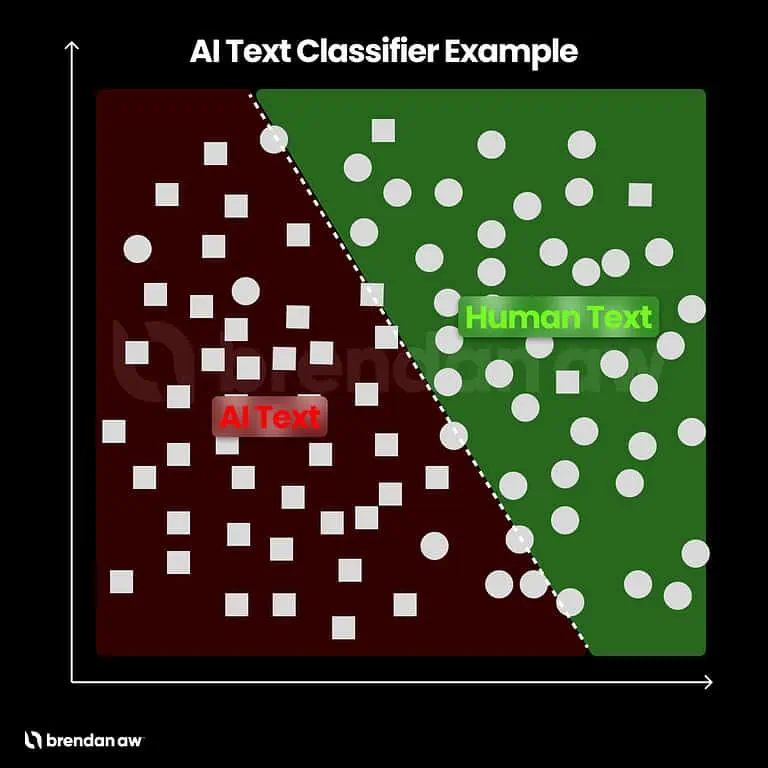

Classifiers: The Sorting Hat of AI Detection

Classifiers kinda operate like Harry Potter’s Sorting Hat, sorting data into predetermined classes.

Using machine or deep learning models, these classifiers examine features like word usage, grammar, style, and tone to differentiate between AI-generated and human-written texts.

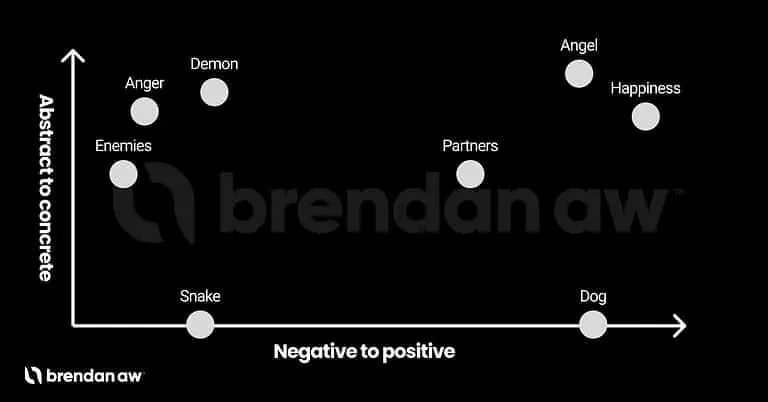

Picture a scatter plot where each data point is a text entry, and the features will form the axes.

Then let’s say we have two classes:

- AI Text

- Human Text

Whatever text you are testing will fall into one of these two clusters.

Here’s a graphic I made so you can visualize it.

The classifier’s job is to form a boundary to separate the 2.

Depending on what classifier model was used, some examples include:

- Logistic Regression

- Decision Trees

- Random Forest

- Support Vector Machines (SVM)

- K-Nearest Neighbors (KNN)

Note: You don’t need to know what these are, but just know they are algorithms that sort data in different ways.

That boundary might be a line, curve, or some other random shape.

And when you test a new text (data point), the classifier will simply put it in either one of those classes.

Embeddings: The DNA of Words

What if every word had its own covert code, as though we were watching some exciting spy flick?

In artificial intelligence (AI) and language comprehension, this is precisely what happens.

These codes are known as embeddings. Essentially unique DNA for individual words.

By capturing the core meaning behind each term and understanding how each relates to others in context, these embeddings form a semantic web of meaning.

This is done by representing each word as a vector in an Nth dimensional space and running some fancy computation. It can be 2D, 3D, or 302934809D.

Note: A vector is a quantity with both magnitude and direction. But for this explanation, just think of it as coordinates on a graph.

But why a vector?

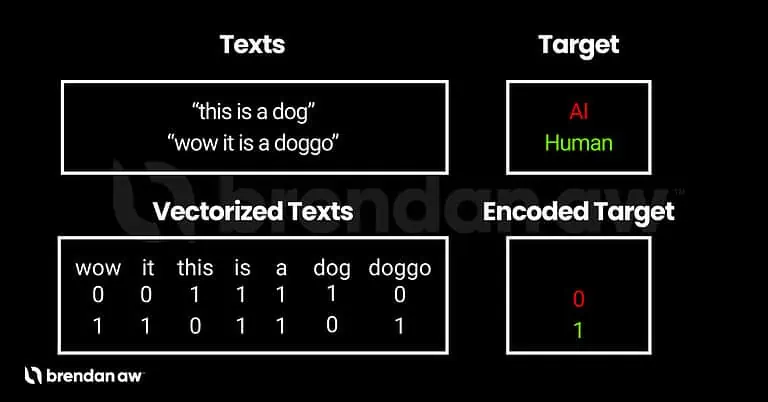

Because computers don’t understand words. SHOCKING. No serious.

That’s why it has to be converted into numbers first through vectorization.

Here’s an example of what it might look like in a table.

Note: Vectorized text numerical values can take on a wide range of values, not just binary ones (1 or 0). I just made it easier to visualize.

And here’s another 2D example of plotting vectors on a graph.

I’m sure you can imagine what 3D will look like, but don’t ask me to illustrate 4D. No human can tell you, but a computer can do it with the magic of MATH.

This is also exactly how Google operates. How do you think you can type something in the search bar and get scarily related results?

But what about telling apart text produced by humans versus that generated using AI?

We transform all text into their respective embeddings before feeding them to a machine-learning model to be trained.

Then, without even knowing any actual verbiage itself, it will form all these connections and figure out all the “codes” common with AI-generated text.

Cool huh?

Perplexity: The Litmus Test for AI-Generated Text

Perplexity is a measure of how well a probability distribution or a language model can predict a sample.

In the context of AI-generated content detection, perplexity acts as a litmus test for AI-generated text.

The lower the perplexity, the more likely the text is AI-generated.

It’s like a detective using a fingerprint match to identify a suspect.

Here’s a table breaking it down.

| Perplexity Level | Interpretation | Example |

|---|---|---|

| Low (close to 1) | The language model is highly confident in its predictions. It’s like a well-read book critic accurately predicting the next word in a novel. | A language model trained on medical literature predicting terms in a medical textbook. |

| Medium | The language model is somewhat confident in its predictions. It’s like a casual reader making some accurate and some inaccurate predictions about the next word in a novel. | A language model trained on general English literature predicting terms in a science fiction novel. |

| High | The language model is not confident in its predictions. It’s like a beginner reader trying to predict the next word in a complex philosophical text. | A language model trained on sports articles trying to predict terms in a legal document. |

Burstiness: The Telltale Sign of AI-Generated Text

Burstiness is the variation in the length and complexity of sentences generated by an AI model.

Picture yourself at a restaurant.

The place is filled with various conversations, some loud and boisterous, others quiet and intimate.

Just like those conversations, sentences written by humans tend to be unpredictable due to many nuances.

But AI models usually produce output that is more uniform in length and complexity, while human writing typically shows more significant variability or ‘burstiness’.

AI detectors can flag a text as potentially AI if it notices low variance in sentences: their lengths, structures, and tempos.

Here’s a table with some examples.

| Text Type | Example | Burstiness |

|---|---|---|

| Human-written | “I love going to the park. The fresh air, the sound of birds chirping, and the sight of children playing always lift my spirits. It’s a place where I can relax and unwind, away from the hustle and bustle of city life.” | High (Variation in sentence length and complexity) |

| AI-generated | “I like the park. It is nice. The air is fresh. There are birds. Children play there. It is relaxing.” | Low (Sentences are similar in length and complexity) |

Is AI Detection Accurate?

I’ll straight up tell you that it’s never 100% accurate, even if the score turns out to be 100%.

That’s just the model’s CONFIDENCE.

When an AI detector analyzes text, instead of simply deciding between human-written or AI-generated content, it typically calculates scores or probabilities for each classification based on particular features within said material.

For instance, let us assume we analyzed some text with our AI detector. It gave us scores of 0.7 for “AI” and 0.3 for “human.”

These numbers indicate that our detector has determined roughly a seventy-to-thirty chance (70% vs 30%) that our material falls under one category versus another.

Thus deciding ultimately whether one category does or doesn’t apply becomes quite easy.

Assigning probability measures rather than leaving things binary offers numerous benefits since it provides insight into how much trust you can place in predictions.

There’s more to determining whether a text was written by humans or artificially intelligent tools than just separating them into two categories: “human” and “AI.”

If the assessment method used involves calculating probability scores, then how close or far apart these scores are can impact an AI model’s certainty in making predictions.

For instance, if there isn’t much difference between the score assigned to an AI-generated piece and one written by humans (like getting scores of 0.51 and 0.49, respectively), then detecting their source will be more challenging compared to cases where their probabilities vary widely (e.g. getting probabilities of 0.9 versus 0.1).

So, despite yielding binary results, this decision involves detailed analysis relying heavily on the difference in probability scores.

That was quite a long paragraph, but that’s the best I can explain it.

Note: You might see other articles discussing how AI detectors work by calculating the likelihood of each word being the next predicted word or temperature. That refers to how AI writers work, not AI detectors. They got the search intent completely off.

What Is the Future of AI Content Detection?

As we witness, further evolution within artificial intelligence comes with an increased level of sophistication within machine-generated content, which presents a distinctive challenge towards detecting such content effectively.

Therefore all involved in its development process need to work on creating even more cutting-edge and accurate tools that can keep up.

Detecting false information created by AI accurately is vital for maintaining online information credibility, which will be the only way to counter these threats effectively.

Moreover, it is important that we pay close attention to ethical considerations surrounding privacy encroachment, consent violations, and the potential for misuse of this powerful technology.

Who Uses AI Detection?

Here are some groups that stand to benefit the most from using AI detection:

- Schools: Prevent students from abusing AI writing software via learning management systems.

- Businesses: Get rid of spam, fake reviews, or fake news.

- Law Enforcement Agencies: Eliminate criminal activities such as impersonation, identity fraud, and cyberbullying.

- Social Media Platforms: Remove bots and fake accounts that spread misinformation and propaganda.

- Media and Journalism Organizations: Identify false news and propaganda or even replace writers who rely on AI too much.

- Government Organizations: Eradicate disinformation campaigns and propaganda.

How Does AI Detection Work (FAQs)

Do AI Content Detection Tools Have Any Limitations or Shortcomings?

AI content detection tools do have some shortcomings and limitations. Their accuracy is not always perfect, as AI-generated content improves and becomes more indistinguishable from human-generated text.

Additionally, AI detectors may struggle to identify AI-generated content designed to be undetectable. Future developments in both AI-generated and detection technologies will determine the extent of these limitations.

Why Use AI Detection in SEO?

There is still an ongoing debate on with Google can detect AI-generated content despite having said in their most recent update that they no longer consider AI content spammy if it’s valuable. However, you never really know when or if they will actually penalize you. So most SEOs will still use AI detection to be safe.

To Sum Up

So I’ve covered everything you need to know about AI detection.

From why you need it, what really goes behind the scenes of training such a model, its accuracy, and the future of it.

I hope this helps you understand this topic slightly better.

If you liked it, please share this article and leave a comment below!

Like this article?

[wp_ulike for=”post” style=”wp-ulike-pin”]Share this article

About the author

Hi, I’m Brendan Aw. A creator, GTM engineer, and digital entrepreneur obsessed with building lean businesses from home. Professionally, I’ve led marketing for 7–8 figure startups in e-commerce, fintech, e-sports, retail, agencies and Web3. I hold a B.Com in Accounting & Finance from UNSW and a Data Science certification from Le Wagon. Now, I document my entrepreneurship journey online for myself and others.

Here are more resources for you:

- Read Baw Notes: My weekly letter for those building lean, or one-person businesses using systems, automation, and digital leverage.

- Read my blog: Explore tactical guides on automation, systems, monetization, growth, and solo strategy.

- Use my online business tool stack: Discover the exact tools I use to run my businesses.

Not Subscribed?

A weekly letter for those building lean and one-person digital-first businesses.